How the Birthmark Standard protects photographer privacy while enabling verification

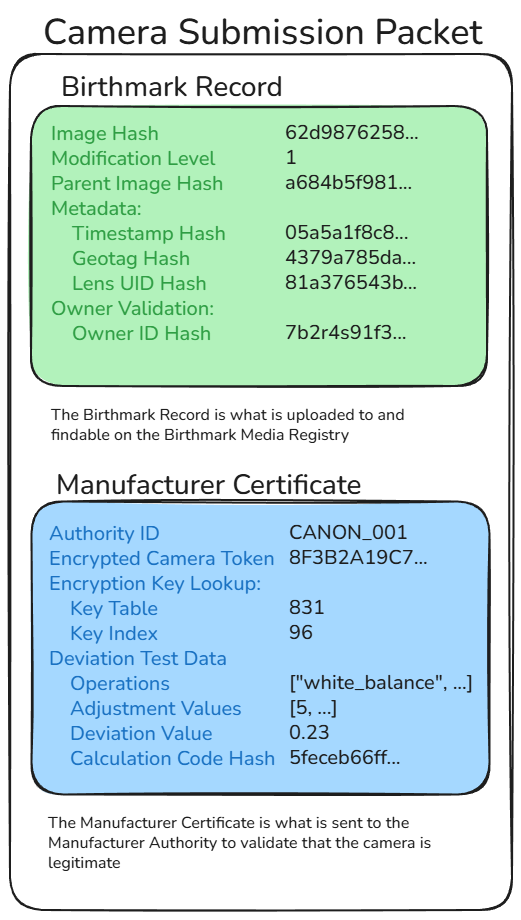

When a camera authenticates an image, it creates two separate data structures that serve different purposes and go to different recipients. This architectural separation ensures no single party has complete information.

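The Birthmark Record goes to the blockchain for public verification. It holds the image's SHA-256 hash and a submission timestamp rounded up to the minute, plus hashed metadata if the photographer opts in. It never includes camera identification data.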

The Manufacturer Certificate goes only to the camera manufacturer for validation. It carries the information the manufacturer needs to validate the camera, but no image hashes or image content.

The manufacturer can identify which specific camera authenticated but never sees the image hashes or content. The blockchain stores image hashes but never receives camera identification data.

The core privacy mechanism: authentication creates two separate data structures sent to different parties that are never combined in any public record.

Result: Connecting a specific camera to a specific image requires compromising multiple independent systems with opposing incentives. The manufacturer can identify the camera but never sees image hashes. The submission server sees image hashes but cannot tell which camera produced them without the manufacturer's keys.
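A minimal Python sketch of the split follows. The field names `key_table_id` and `encrypted_camera_token` are hypothetical placeholders for whatever camera-identifying material the Manufacturer Certificate actually carries, which this section does not specify. What the sketch shows is structural: the public record holds the image hash and no camera identity, while the certificate holds camera identity and no image hash.

```python
import hashlib
from dataclasses import dataclass, fields
from typing import Tuple


@dataclass(frozen=True)
class BirthmarkRecord:
    """Public part, posted to the blockchain: contains no camera identity."""
    image_hash: str  # SHA-256 hex digest of the image
    timestamp: int   # Unix seconds, assumed already rounded to the minute


@dataclass(frozen=True)
class ManufacturerCertificate:
    """Private part, sent only to the manufacturer: contains no image data.
    Both fields are hypothetical placeholders for illustration."""
    key_table_id: int
    encrypted_camera_token: bytes


def authenticate(image: bytes, timestamp: int, key_table_id: int,
                 encrypted_camera_token: bytes) -> Tuple[BirthmarkRecord, ManufacturerCertificate]:
    """Build both structures from one capture; they are returned separately
    and never merged into a single object."""
    record = BirthmarkRecord(hashlib.sha256(image).hexdigest(), timestamp)
    certificate = ManufacturerCertificate(key_table_id, encrypted_camera_token)
    return record, certificate


# The two structures have disjoint fields: neither carries the other's data.
assert {f.name for f in fields(BirthmarkRecord)}.isdisjoint(
    {f.name for f in fields(ManufacturerCertificate)})
```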

Each camera is randomly assigned 3 key tables (out of 2,500 total). Each table is shared by thousands of cameras. When authenticating, the camera randomly selects one of its 3 assigned tables.

Result: Submission servers and public verifiers cannot identify which specific camera authenticated an image. Only the manufacturer can potentially identify the device, through its private validation process, and it never sees what was authenticated.
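The key-table scheme can be sketched in Python as follows, assuming tables are identified by integer indices 0 to 2,499. That indexing, and the names `assign_tables`, `select_table`, and `expected_cameras_per_table`, are illustrative choices; the text does not say how tables are represented. The last function does the anonymity-set arithmetic: with 3 tables per camera spread over 2,500 tables, each table is shared on average by `num_cameras * 3 / 2500` cameras.

```python
import random
from typing import List

TOTAL_TABLES = 2_500    # from the text: 2,500 key tables in total
TABLES_PER_CAMERA = 3   # from the text: each camera is assigned 3


def assign_tables(rng: random.Random) -> List[int]:
    """Randomly assign a camera 3 distinct table indices out of 2,500."""
    return rng.sample(range(TOTAL_TABLES), TABLES_PER_CAMERA)


def select_table(assigned: List[int], rng: random.Random) -> int:
    """At authentication time, pick one of the camera's assigned tables at random."""
    return rng.choice(assigned)


def expected_cameras_per_table(num_cameras: int) -> float:
    """Average number of cameras sharing one table, i.e. the anonymity set
    that a table index alone narrows a submission down to."""
    return num_cameras * TABLES_PER_CAMERA / TOTAL_TABLES


# Seeded generator for a reproducible demo only.
rng = random.Random(42)
tables = assign_tables(rng)
used = select_table(tables, rng)
assert used in tables
```

For a hypothetical fleet of 2,000,000 cameras, `expected_cameras_per_table(2_000_000)` returns `2400.0`. A real device would presumably draw from a cryptographically secure source; `random.SystemRandom()` is a subclass of `random.Random` and can be passed in place of the seeded generator.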

Image content is never transmitted to or stored on any server. Only SHA-256 hashes of images are submitted to the blockchain.

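Result: Complete privacy of image content. SHA-256 has 2^256 possible outputs: finding any two inputs with the same hash takes on the order of 2^128 operations (the birthday bound), and recovering an image from its hash is harder still, so both are computationally infeasible.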
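A minimal sketch of the hashing step using Python's standard `hashlib`. The function name `image_sha256` is hypothetical, and reading in chunks is an illustrative choice so that a large RAW file need not sit in memory all at once. Only the returned hex digest would leave the device.

```python
import hashlib
import io
from typing import BinaryIO


def image_sha256(stream: BinaryIO, chunk_size: int = 1 << 16) -> str:
    """Return the SHA-256 hex digest of an image stream, read chunk by chunk."""
    h = hashlib.sha256()
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        h.update(chunk)
    return h.hexdigest()


# In-memory bytes stand in for an image file here.
digest = image_sha256(io.BytesIO(b"abc"))
print(digest)  # ba7816bf8f01cfea414140de5dae2223b00361a396177a9cb410ff61f20015ad
```

Anyone who already holds the image can recompute this digest and compare it with the one on the blockchain; anyone who holds only the digest cannot reconstruct the image from it.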

All timestamps recorded on the blockchain are rounded up to the next whole minute. This creates anonymity sets: all submissions within the same minute receive identical timestamps.

Result: Even if an adversary observes submission timing patterns, they cannot distinguish individual images authenticated within the same 60-second window. This prevents timing-based correlation attacks and protects photographers who authenticate multiple images in quick succession.
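The rounding rule can be sketched with Python's `datetime`, assuming timestamps are handled as timezone-aware `datetime` values; the Standard's actual on-chain timestamp format is not specified here, and `round_up_to_minute` is a hypothetical name. A timestamp already on an exact minute is left unchanged; anything later within that minute moves to the start of the next minute.

```python
from datetime import datetime, timedelta, timezone


def round_up_to_minute(ts: datetime) -> datetime:
    """Round a timestamp up to the next whole minute; exact minutes are unchanged."""
    floored = ts.replace(second=0, microsecond=0)
    return floored if floored == ts else floored + timedelta(minutes=1)


# Two captures 43 seconds apart land in the same anonymity set.
utc = timezone.utc
a = round_up_to_minute(datetime(2024, 5, 1, 12, 0, 5, tzinfo=utc))
b = round_up_to_minute(datetime(2024, 5, 1, 12, 0, 48, tzinfo=utc))
assert a == b == datetime(2024, 5, 1, 12, 1, tzinfo=utc)
```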

Timestamp, GPS coordinates, lens ID, and owner ID are hashed before being included in the Birthmark Record. These features are opt-in and disabled by default.

Owner ID Hash Salt: The owner_hash uses a unique random salt stored in the image's EXIF metadata. This prevents correlation attacks across multiple images—each image from the same photographer produces a different owner_hash, making it impossible to link images by owner ID alone.
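A sketch of the salted `owner_hash`, assuming SHA-256 over a 16-byte random salt prepended to the UTF-8 owner ID. The salt length, the concatenation order, and the function names `make_owner_hash` and `verify_owner` are assumptions for illustration, not taken from the Standard. In this model the salt would be written to the image's EXIF metadata and the digest to the Birthmark Record.

```python
import hashlib
import hmac
import secrets
from typing import Optional, Tuple


def make_owner_hash(owner_id: str, salt: Optional[bytes] = None) -> Tuple[str, bytes]:
    """Hash an owner ID with a per-image salt (assumed: 16 random bytes).
    Returns (owner_hash, salt); the salt would be stored in EXIF."""
    if salt is None:
        salt = secrets.token_bytes(16)
    digest = hashlib.sha256(salt + owner_id.encode("utf-8")).hexdigest()
    return digest, salt


def verify_owner(owner_id: str, salt: bytes, owner_hash: str) -> bool:
    """Recompute the hash from a claimed owner ID and the salt read from EXIF."""
    expected, _ = make_owner_hash(owner_id, salt)
    return hmac.compare_digest(expected, owner_hash)


# Same owner, two images: different salts give unlinkable hashes.
h1, s1 = make_owner_hash("photographer-123")
h2, s2 = make_owner_hash("photographer-123")
assert h1 != h2
assert verify_owner("photographer-123", s1, h1)
```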

Result: Photographers can prove metadata authenticity without revealing location or identity unless they choose to share the original metadata alongside the image. Hashes confirm information you already have but never reveal information you don't possess.
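Verifying disclosed metadata can be sketched as recomputing each disclosed field's hash and comparing it with the value in the record. This assumes each field is hashed as a UTF-8 string in an agreed canonical form (the `"lat,lon"` GPS string below is hypothetical) and uses plain SHA-256 for simplicity, which may not match the Standard's actual encoding. Fields the photographer chooses not to disclose are simply left out of the check.

```python
import hashlib
from typing import Dict


def hash_field(value: str) -> str:
    """Hash one metadata value in its (assumed) canonical string form."""
    return hashlib.sha256(value.encode("utf-8")).hexdigest()


def verify_metadata(disclosed: Dict[str, str], recorded: Dict[str, str]) -> Dict[str, bool]:
    """For each disclosed field, check its hash against the recorded hash.
    A field with no recorded hash fails verification."""
    return {name: hash_field(value) == recorded.get(name)
            for name, value in disclosed.items()}


# Hypothetical record holding a hashed GPS field.
recorded = {"gps": hash_field("40.7128,-74.0060")}
print(verify_metadata({"gps": "40.7128,-74.0060"}, recorded))  # {'gps': True}
```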

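Information is distributed across multiple independent parties, so each sees only non-identifying fragments: the manufacturer sees which camera authenticated but never what it authenticated; the submission server sees image hashes but cannot tell which camera produced them; the public blockchain holds only hashes and minute-rounded timestamps.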

Result: Combining the fragments requires cooperation from entities with opposing incentives. No single point of failure or control.

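The system protects journalistic sources through architectural design, not just policy. Connecting a specific camera to a specific image requires the image itself (to compute its hash), the submission server's records for that hash, and the manufacturer's keys to identify the camera behind them.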

Result: Fishing expeditions are prevented. Authorities cannot browse the blockchain to identify photographers. Even with complete system access, adversaries can only connect cameras to images they already possess; they cannot predict future images or learn what new images depict.

Design Philosophy: The Birthmark Standard proves an image came from a legitimate camera sensor, not AI generation. It does not prove the scene is real, only that a physical camera captured it. Truth verification still requires journalistic judgment and context.